```python
>>> import requests
>>> geo = {'format': 'json', 'lat': '51.22778325', 'lon': '51.43176735508452'}
>>> r = requests.get('https://nominatim.openstreetmap.org/reverse', params=geo)
>>> r.json()
{'place_id': 158413949,
 'licence': 'Data © OpenStreetMap contributors, ODbL 1.0. https://osm.org/copyright',
 'osm_type': 'way', 'osm_id': 227214466,
 'lat': '51.22778325',
 'lon': '51.43176735508452',
 'display_name': '2/3, улица Юрия Гагарина, Уральск, Уральск Г.А., Западно-Казахстанская область, 090005, Қазақстан',
 'address': {'house_number': '2/3', 'road': 'улица Юрия Гагарина', 'city': 'Уральск', 'county': 'Уральск Г.А.', 'state': 'Западно-Казахстанская область', 'ISO3166-2-lvl4': 'KZ-ZAP', 'postcode': '090005', 'country': 'Қазақстан', 'country_code': 'kz'},
 'boundingbox': ['51.2274636', '51.2280884', '51.4309297', '51.4323584']}
>>> type(r.json())
<class 'dict'>
>>> r.json().keys()
dict_keys(['place_id', 'licence', 'osm_type', 'osm_id', 'lat', 'lon', 'display_name', 'address', 'boundingbox'])
>>> r.json().get('address').keys()
dict_keys(['house_number', 'road', 'city', 'county', 'state', 'ISO3166-2-lvl4', 'postcode', 'country', 'country_code'])
>>> r.json().get('address').get('city')
'Уральск'
```
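Chaining `.get('address').get('city')` raises `AttributeError` when a reply has no `address` key. A slightly more defensive sketch follows; `city_from_reverse` is a hypothetical helper name, and the fallback to `town` and `village` assumes Nominatim uses those keys instead of `city` for smaller settlements. (Nominatim's usage policy also asks clients to identify themselves with a `User-Agent` header.)

```python
def city_from_reverse(payload: dict):
    """Return the settlement name from a Nominatim /reverse JSON reply, or None."""
    # `or {}` covers replies that carry no "address" key at all.
    address = payload.get("address") or {}
    # Assumption: smaller places may be tagged "town" or "village" instead of "city".
    for key in ("city", "town", "village"):
        if key in address:
            return address[key]
    return None


# Trimmed copy of the reply shown above.
SAMPLE_REPLY = {
    "place_id": 158413949,
    "address": {"road": "улица Юрия Гагарина", "city": "Уральск", "country_code": "kz"},
}

print(city_from_reverse(SAMPLE_REPLY))  # Уральск
print(city_from_reverse({}))            # None
```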

```python
sql = (
    "INSERT INTO `companies` "
    "(`tg-id`, `tg-login`, `company-name`, `line-business`, `line-business-id`) "
    "VALUES (%s, %s, %s, %s, %s)"
)
```

And the bigger problem overall: your code is async, but you work with the database synchronously. Those calls will always block the event loop, so you get no benefit from async at all.

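One common way to keep blocking database calls from freezing the event loop is to run them in a worker thread with `asyncio.to_thread` (Python 3.9+); the other is to switch to an async driver such as aiomysql. The sketch below takes the first route and uses the standard-library `sqlite3` as a stand-in for the original MySQL-style driver, so the placeholders are `?` rather than `%s`. The table layout, file path and sample rows are made up for the demo.

```python
import asyncio
import os
import sqlite3
import tempfile
from contextlib import closing

# sqlite3 is a stand-in for the original driver; it uses "?" placeholders, not "%s".
INSERT_SQL = (
    "INSERT INTO companies "
    '("tg-id", "tg-login", "company-name", "line-business", "line-business-id") '
    "VALUES (?, ?, ?, ?, ?)"
)


def create_table(db_path: str) -> None:
    with closing(sqlite3.connect(db_path)) as conn:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                'CREATE TABLE IF NOT EXISTS companies ("tg-id" INTEGER, "tg-login" TEXT, '
                '"company-name" TEXT, "line-business" TEXT, "line-business-id" INTEGER)'
            )


def insert_company_blocking(db_path: str, row: tuple) -> None:
    # Ordinary synchronous DB code: it blocks whichever thread runs it.
    with closing(sqlite3.connect(db_path)) as conn:
        with conn:
            conn.execute(INSERT_SQL, row)


async def insert_company(db_path: str, row: tuple) -> None:
    # Run the blocking call in a worker thread so the event loop stays free.
    await asyncio.to_thread(insert_company_blocking, db_path, row)


def count_companies(db_path: str) -> int:
    with closing(sqlite3.connect(db_path)) as conn:
        return conn.execute("SELECT COUNT(*) FROM companies").fetchone()[0]


async def main(db_path: str) -> None:
    rows = [
        (1, "alice", "Acme", "retail", 10),
        (2, "bob", "Globex", "logistics", 20),
    ]
    await asyncio.gather(*(insert_company(db_path, row) for row in rows))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo.db")
        create_table(path)
        asyncio.run(main(path))
        print(count_companies(path))
```

Each worker opens its own connection because a `sqlite3` connection is, by default, tied to the thread that created it.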
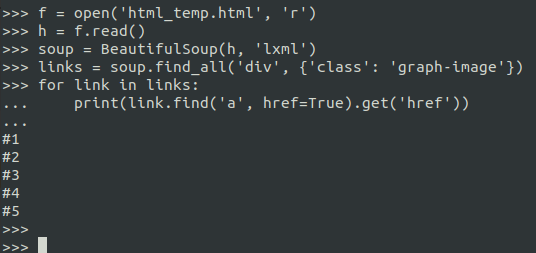

```python
>>> from bs4 import BeautifulSoup
>>> html_chunk = '<meta property="og:description" content="Студенческая ул., 187, Энгельс, Саратовская область" />'
>>> soup = BeautifulSoup(html_chunk, 'html.parser')
>>> desc = soup.find("meta", property="og:description")
>>> desc.get("content")
'Студенческая ул., 187, Энгельс, Саратовская область'
```
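If installing bs4 is not an option, the standard library's `html.parser` can do the same lookup. A minimal sketch that collects every `og:*` meta tag into a dict; `OpenGraphParser` and `og_tags` are hypothetical names:

```python
from html.parser import HTMLParser


class OpenGraphParser(HTMLParser):
    """Collect <meta property="og:*" content="..."> tags into a dict."""

    def __init__(self):
        super().__init__()
        self.tags = {}

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        # Self-closing tags like <meta ... /> also end up here via the
        # default handle_startendtag().
        if tag != "meta":
            return
        attributes = dict(attrs)
        prop = attributes.get("property") or ""
        content = attributes.get("content")
        if prop.startswith("og:") and content is not None:
            self.tags[prop] = content


def og_tags(html: str) -> dict:
    parser = OpenGraphParser()
    parser.feed(html)
    parser.close()
    return parser.tags


html_chunk = '<meta property="og:description" content="Студенческая ул., 187, Энгельс, Саратовская область" />'
print(og_tags(html_chunk)["og:description"])
```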

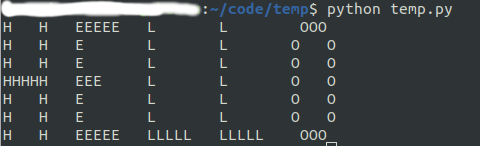

```python
# main script
import alfabet_ascii

letters = [alfabet_ascii.H, alfabet_ascii.E,
           alfabet_ascii.L, alfabet_ascii.L, alfabet_ascii.O]

row = 0
while row < 7:  # every glyph is 7 rows tall
    line = ""
    column = 0
    while column < len(letters):
        letter = letters[column]
        line += letter[row] + " "  # one space between letters
        column += 1
    print(line)
    row += 1
```

```python
# alfabet_ascii.py: each letter is a list of 7 rows, 5 characters wide
A = ["  A  ", " A A ", "A   A", "AAAAA", "A   A", "A   A", "A   A"]
B = ["BBBB ", "B   B", "B   B", "BBBB ", "B   B", "B   B", "BBBB "]
H = ["H   H", "H   H", "H   H", "HHHHH", "H   H", "H   H", "H   H"]
E = ["EEEEE", "E    ", "E    ", "EEE  ", "E    ", "E    ", "EEEEE"]
L = ["L    ", "L    ", "L    ", "L    ", "L    ", "L    ", "LLLLL"]
O = [" OOO ", "O   O", "O   O", "O   O", "O   O", "O   O", " OOO "]
```
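The two nested `while` loops can also be written with `zip(*glyphs)`, which yields row *i* of every letter at once. A sketch, assuming every glyph is 7 rows by 5 columns (as full rows like `HHHHH` and `EEEEE` suggest); `FONT` and `render` are hypothetical names, and unlike the loop version this joins letters with a single space and leaves no trailing space:

```python
# Hypothetical font: a few glyphs from alfabet_ascii, each 7 rows x 5 columns.
FONT = {
    "H": ["H   H", "H   H", "H   H", "HHHHH", "H   H", "H   H", "H   H"],
    "E": ["EEEEE", "E    ", "E    ", "EEE  ", "E    ", "E    ", "EEEEE"],
    "L": ["L    ", "L    ", "L    ", "L    ", "L    ", "L    ", "LLLLL"],
    "O": [" OOO ", "O   O", "O   O", "O   O", "O   O", "O   O", " OOO "],
}


def render(word, font=FONT):
    """Return the banner for `word` as a list of text rows."""
    glyphs = [font[ch] for ch in word.upper()]
    # zip(*glyphs) groups the i-th row of every glyph together.
    return [" ".join(rows) for rows in zip(*glyphs)]


if __name__ == "__main__":
    for line in render("hello"):
        print(line)
```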

```python
import pickle

with open('filename.pkl', 'rb') as fd:
    data = pickle.load(fd)
```
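For completeness, the matching write side, round-tripped through a temporary file. `save` and `load` are hypothetical names; note that `pickle.load` can execute arbitrary code, so only load files you trust.

```python
import os
import pickle
import tempfile


def save(obj, path):
    # pickle produces bytes, so the file must be opened in binary mode ("wb").
    with open(path, "wb") as fd:
        pickle.dump(obj, fd)


def load(path):
    # Only unpickle files you trust: loading a pickle can run arbitrary code.
    with open(path, "rb") as fd:
        return pickle.load(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "filename.pkl")
        save({"lat": 51.22778325, "tags": ["a", "b"]}, path)
        print(load(path))
```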

```python
>>> import requests
>>> URL = 'https://blockchain.info/ticker'
>>> response = requests.get(URL)
>>> response.json()
{'AUD': {'15m': 51425.37, 'last': 51425.37, 'buy': 51425.37, 'sell': 51425.37, 'symbol': 'AUD'}, ...}
```
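The reply is a dict keyed by currency code. A small sketch for reading one price, assuming every currency entry has the same shape as the `AUD` entry shown (`last_price` is a hypothetical helper):

```python
def last_price(ticker: dict, currency: str) -> float:
    """Return the 'last' price for one currency from the /ticker reply."""
    try:
        return ticker[currency]["last"]
    except KeyError:
        raise ValueError(f"currency {currency!r} not in ticker") from None


# The AUD entry from the reply above.
SAMPLE_TICKER = {
    "AUD": {"15m": 51425.37, "last": 51425.37, "buy": 51425.37, "sell": 51425.37, "symbol": "AUD"},
}

print(last_price(SAMPLE_TICKER, "AUD"))
```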

```python
proxies = get_free_proxies()
s = get_session(proxies)  # get_session() is not shown here
```

```python
import requests
from bs4 import BeautifulSoup as bs


def get_free_proxies():
    url = "https://free-proxy-list.net/"
    # send an HTTP request and build a soup object
    soup = bs(requests.get(url).content, "html.parser")
    proxies = []
    # skip the header row of the proxy table
    for row in soup.find("table", attrs={"class": "table"}).find_all("tr")[1:]:
        tds = row.find_all("td")
        try:
            ip = tds[0].text.strip()
            port = tds[1].text.strip()
            host = f"{ip}:{port}"
            proxies.append(host)
        except IndexError:
            # rows with fewer than two cells are not proxy entries
            continue
    return proxies
```

```python
>>> proxies = get_free_proxies()
>>> proxies
['85.195.120.157:1080', '142.82.48.250:80', '58.234.116.197:8193', ...]
```
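`get_session()` isn't shown in the thread. One piece it would need is the mapping that `requests` accepts in its `proxies=` argument (URL scheme to proxy URL). A hypothetical sketch that builds it from the `ip:port` strings returned above, assuming they are plain HTTP proxies rather than SOCKS:

```python
import random


def proxy_mapping(host: str) -> dict:
    """Hypothetical helper: turn "ip:port" into a scheme -> proxy URL mapping."""
    proxy_url = f"http://{host}"  # assumes an HTTP proxy
    return {"http": proxy_url, "https": proxy_url}


def random_proxy(proxies, rng=random):
    # Pick one proxy at random for the next request.
    return proxy_mapping(rng.choice(proxies))


print(proxy_mapping("142.82.48.250:80"))
```

With `requests` installed this would be used as `requests.get(url, proxies=random_proxy(proxies), timeout=10)`. Free proxies go down often, so expect some requests to fail and retry with another entry.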

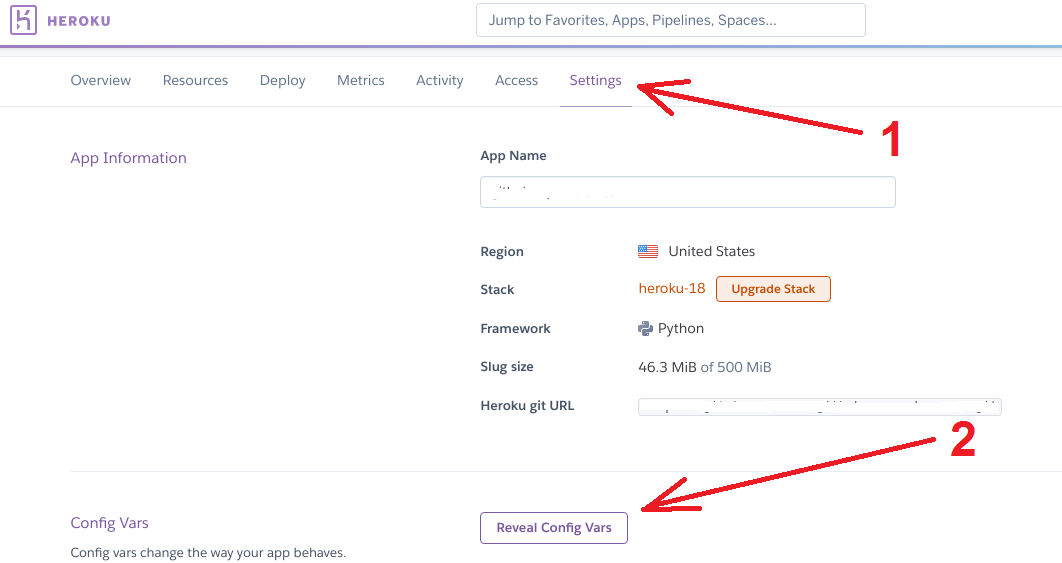

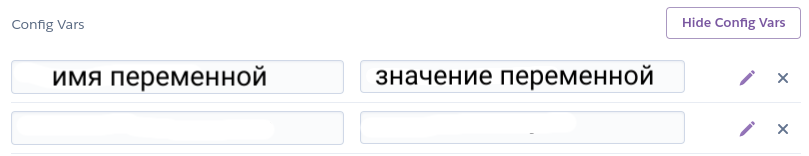

```shell
$ heroku config:set GITHUB_USERNAME=joesmith
Adding config vars and restarting myapp... done, v12
GITHUB_USERNAME: joesmith
```

```python
import os

access_token = os.getenv("ACCESS_TOKEN")
```
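Heroku exposes config vars to the app as ordinary environment variables, so `os.getenv` silently returns `None` when one is missing. A hypothetical helper that fails early with a clear message instead:

```python
import os


def require_env(name: str) -> str:
    """Read a config var (e.g. one set with `heroku config:set`) or fail loudly."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"environment variable {name} is not set")
    return value
```

Usage would look like `username = require_env("GITHUB_USERNAME")`.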

```python
>>> import requests
>>> headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36'}
>>> res = requests.get('https://api.asos.com/product/catalogue/v2/products/12181064?store=RO&lang=en-GB&currency=HKD', headers=headers)
>>> res.status_code
200
>>> res.json()
{'id': 12181064, 'name': 'ASOS DESIGN ruched mini dress with puff sleeves in check print', 'description': '<...', ...}
```
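Hand-written query strings are easy to mangle. Letting a library do the encoding avoids that: `requests` does it when you pass `params=` (as in the Nominatim example), and the standard-library equivalent is `urllib.parse.urlencode`. A sketch, assuming the intended parameters are `store=RO`, `lang=en-GB` and `currency=HKD`; `build_url` is a hypothetical helper:

```python
from urllib.parse import urlencode

BASE = "https://api.asos.com/product/catalogue/v2/products/12181064"


def build_url(base: str, params: dict) -> str:
    # urlencode joins key=value pairs with "&" and percent-encodes values as needed.
    return f"{base}?{urlencode(params)}"


print(build_url(BASE, {"store": "RO", "lang": "en-GB", "currency": "HKD"}))
```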