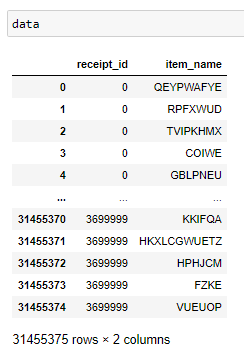

Someone elsewhere suggested the approach below. It works in principle, but on my dataset it needs 160 GB of RAM, which unfortunately I don't have.

import pandas as pd

from mlxtend.preprocessing import TransactionEncoder

from mlxtend.frequent_patterns import fpgrowth

# Sample data in a similar structure to yours

df = pd.DataFrame({

'reciept_id':[1,1,2,2,3,3],

'reciept_dayofweek':[4,4,5,5,6,6],

'reciept_time':['20:20','20:20','12:13','12:13','11:10','11:10'],

'item_name':['Milk','Onion','Dill','Onion','Milk','Onion']

})

# Create an array of items per transactions

dataset = df.groupby(['reciept_id','reciept_dayofweek','reciept_time'])['item_name'].apply(list).values

# Create the required structure for data to go into the algorithm

te = TransactionEncoder()

te_ary = te.fit(dataset).transform(dataset)

df = pd.DataFrame(te_ary, columns=te.columns_)

# Generate frequent itemsets with a minimum support of 1/len(dataset)

# This is the same as saying give me every combination that shows up at least once

# The maximum size of any given itemset is 2, but you could change it to have any number

frequent = fpgrowth(df, min_support=1/len(dataset), use_colnames=True, max_len=2)

# Get rid of single item records

frequent = frequent[frequent['itemsets'].apply(len) == 2].copy()

# Multiply support by the number of transactions to get the count of times each item set appeared

# in the original data set

frequent['frequency'] = frequent['support'] * len(dataset)

# View the results

print(frequent[['itemsets','frequency']])
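
Since the snippet above only keeps pairs (`max_len=2`) and its minimum support admits every pair that occurs at least once, the same frequencies can probably be obtained by counting pairs receipt by receipt, without building the one-hot matrix at all. Below is a minimal sketch of that idea. It is a different technique from `fpgrowth`, not a drop-in replacement, and `count_item_pairs` is a hypothetical helper name of my own. It also assumes that `reciept_id` alone identifies a receipt, which I can't confirm for the real data.

```python
from collections import Counter, defaultdict
from itertools import combinations


def count_item_pairs(rows):
    """Count how many receipts contain each unordered pair of items.

    rows: iterable of (receipt_key, item_name) tuples.
    (Hypothetical helper; assumes receipt_key uniquely identifies a receipt.)
    """
    # Collect the distinct items of each receipt. A set ignores repeated
    # lines for the same item, like the boolean one-hot encoding does.
    baskets = defaultdict(set)
    for receipt_key, item_name in rows:
        baskets[receipt_key].add(item_name)

    pair_counts = Counter()
    for items in baskets.values():
        # Sorting gives every pair a single canonical order.
        pair_counts.update(combinations(sorted(items), 2))
    return pair_counts


# Same sample data as above, as (reciept_id, item_name) rows
rows = [
    (1, 'Milk'), (1, 'Onion'),
    (2, 'Dill'), (2, 'Onion'),
    (3, 'Milk'), (3, 'Onion'),
]

pair_counts = count_item_pairs(rows)
for pair, count in pair_counts.most_common():
    print(pair, count)
```

With the original receipt table (before `df` is overwritten by the one-hot frame), the rows could be fed in as `zip(df['reciept_id'], df['item_name'])`. Memory here grows with the number of distinct pairs that actually occur rather than with receipts × items, so it should be far lighter than the dense matrix, though whether it fits in RAM still depends on the dataset.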