How do I click a button on https://www.pik.ru/search/vangarden/storehouse with Selenium?

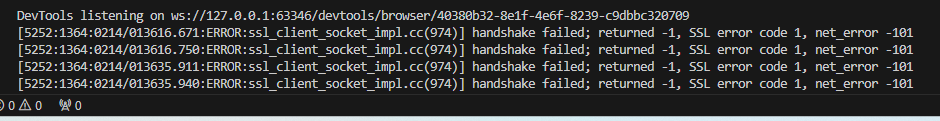

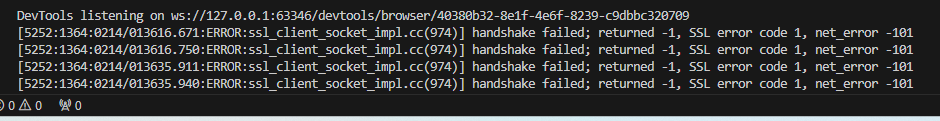

How can I fix this? (Where should I look?)

```
[8468:12460:0214/095050.752:ERROR:ssl_client_socket_impl.cc(974)] handshake failed; returned -1, SSL error code 1, net_error -101
[8468:12460:0214/095050.866:ERROR:ssl_client_socket_impl.cc(974)] handshake failed; returned -1, SSL error code 1, net_error -101
```
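The bracketed prefix of these lines looks like Chromium's own log format (`[pid:tid:MMDD/HHMMSS.mmm:LEVEL:source_file(line)]`), which suggests they are printed by the Chrome process rather than by the Python script. `net_error -101` is `ERR_CONNECTION_RESET` in Chromium's network error list. Such lines are often reported as harmless noise from the browser's own background connections, so they are probably not what prevents the click. The sketch below is only an illustration under assumptions: the function names are hypothetical, the regex encodes the format as read from these two lines, and `quiet_chrome_options()` applies the commonly suggested `--log-level=3` and `excludeSwitches: ["enable-logging"]` settings, whose effect may vary between Chrome/ChromeDriver versions.

```python
import re
from typing import NamedTuple, Optional

# Assumed layout of Chrome's console log lines (read from the two lines above):
# [pid:tid:MMDD/HHMMSS.mmm:LEVEL:source_file(line)] message
LOG_LINE = re.compile(
    r"^\[(?P<pid>\d+):(?P<tid>\d+):(?P<date>\d{4})/(?P<time>\d{6}\.\d+):"
    r"(?P<level>[A-Z]+):(?P<source>[^()\]]+)\(\d+\)\]\s*(?P<message>.*)$"
)
NET_ERROR = re.compile(r"net_error (-?\d+)")


class ChromeLogLine(NamedTuple):
    pid: int
    tid: int
    level: str
    source: str
    message: str
    net_error: Optional[int]  # e.g. -101 (ERR_CONNECTION_RESET), None if absent


def parse_chrome_log_line(line: str) -> Optional[ChromeLogLine]:
    """Split one Chrome log line into fields; return None if it does not match."""
    m = LOG_LINE.match(line.strip())
    if m is None:
        return None
    err = NET_ERROR.search(m["message"])
    return ChromeLogLine(
        pid=int(m["pid"]),
        tid=int(m["tid"]),
        level=m["level"],
        source=m["source"],
        message=m["message"],
        net_error=int(err.group(1)) if err else None,
    )


def quiet_chrome_options():
    """ChromeOptions with the commonly suggested settings that hide Chrome's console log."""
    # Imported here so the parser above works without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_argument("--log-level=3")  # Chrome: 3 = only FATAL messages
    options.add_experimental_option("excludeSwitches", ["enable-logging"])
    return options


if __name__ == "__main__":
    sample = ("[8468:12460:0214/095050.752:ERROR:ssl_client_socket_impl.cc(974)] "
              "handshake failed; returned -1, SSL error code 1, net_error -101")
    print(parse_chrome_log_line(sample))
```

To try the quieter console, pass `options=quiet_chrome_options()` to `webdriver.Chrome()`; the parser itself needs only the standard library.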

```python
import os
import time

import requests
from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

url = 'https://www.pik.ru/search/storehouse'

# File that stores the collected project links
LINKS_FILE = r'C:\Users\kraz1\OneDrive\Рабочий стол\Антон\python\парсинг\кладовочная\pik_links.txt'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/121.0.0.0 Safari/537.36 Edg/121.0.0.0'
}


def download_pages_objects(url):
    # Start from an empty links file on every run
    if os.path.isfile(LINKS_FILE):
        os.remove(LINKS_FILE)

    list_links = []
    req = requests.get(url, headers=headers)
    soup = BeautifulSoup(req.text, "html5lib")
    for i in soup.find_all("a", class_="styles__ProjectCard-uyo9w7-0 friPgx"):
        list_links.append('https://www.pik.ru' + i.get('href') + '\n')
    list_links = list(set(list_links))  # remove duplicate links

    with open(LINKS_FILE, 'a', encoding='utf-8') as file:
        for link in list_links:
            file.write(link)


def get_list_objects_links(url):
    download_pages_objects(url)
    list_of_links = []
    with open(LINKS_FILE, 'r', encoding='utf-8') as file:
        for item in file:
            list_of_links.append(item)
    return list_of_links


def operation(list_links):
    # options = webdriver.ChromeOptions()
    # options.add_argument('--ignore-certificate-errors-spki-list')
    # options.add_argument('--ignore-ssl-errors')
    driver = webdriver.Chrome()
    try:
        driver.get("https://www.pik.ru/search/vangarden/storehouse")

        # By.CLASS_NAME takes a single class name, but the button has two
        # ("sc-gsnTZi fWJuXR"), so use a CSS selector that requires both.
        # Wait up to 22 s for the button to become clickable instead of a fixed sleep.
        button = WebDriverWait(driver, 22).until(
            EC.element_to_be_clickable((By.CSS_SELECTOR, '.sc-gsnTZi.fWJuXR'))
        )
        button.click()  # click() returns None, so there is nothing to assign
        time.sleep(5)  # let the page render the content loaded by the click

        response = driver.page_source
        # Explicit utf-8 so writing does not depend on the Windows default code page
        with open('2.html', 'w', encoding='utf-8') as file:
            file.write(response)

        soup = BeautifulSoup(response, 'lxml')
        print(soup)
        for i in soup.find_all('div', class_='sc-htiqpR fhmJpy'):
            print(i, '\n\n\n')
    finally:
        driver.quit()  # also closes the window, so driver.close() is not needed


def main():
    list_links = get_list_objects_links(url)
    operation(list_links)


if __name__ == '__main__':
    main()
```
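A minimal sketch of the click itself, assuming Selenium 4 and that the button still carries the classes `sc-gsnTZi` and `fWJuXR` from the question. Selenium 4's Python bindings turn `By.CLASS_NAME` into the CSS selector `"." + value`, so `'sc-gsnTZi fWJuXR'` becomes `.sc-gsnTZi fWJuXR`, which looks for an `<fWJuXR>` element inside `.sc-gsnTZi` and finds nothing (older versions reject compound class names outright). Joining the classes as `.sc-gsnTZi.fWJuXR` matches an element that has both. The helpers `class_attr_to_css`, `click_when_ready` and `demo` are hypothetical names, and an explicit `WebDriverWait` stands in for the fixed `time.sleep(22)`.

```python
import time


def class_attr_to_css(class_attr: str, tag: str = "") -> str:
    """Turn a space-separated class attribute into one CSS selector.

    'sc-gsnTZi fWJuXR' -> '.sc-gsnTZi.fWJuXR' (an element that has BOTH classes).
    """
    classes = class_attr.split()
    if not classes:
        raise ValueError("class_attr must contain at least one class name")
    return tag + "".join(f".{name}" for name in classes)


def click_when_ready(driver, css_selector: str, timeout: float = 20.0):
    """Wait until the element is clickable, scroll to it and click it."""
    # Selenium imports are local so class_attr_to_css() works without Selenium installed.
    from selenium.common.exceptions import ElementClickInterceptedException
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    element = WebDriverWait(driver, timeout).until(
        EC.element_to_be_clickable((By.CSS_SELECTOR, css_selector))
    )
    driver.execute_script("arguments[0].scrollIntoView({block: 'center'});", element)
    try:
        element.click()
    except ElementClickInterceptedException:
        # Another element (e.g. a cookie banner) covers the button: fall back to a JS click.
        driver.execute_script("arguments[0].click();", element)
    return element


def demo(url: str = "https://www.pik.ru/search/vangarden/storehouse") -> str:
    """Open the page from the question, click the button and return the resulting HTML."""
    from selenium import webdriver

    driver = webdriver.Chrome()
    try:
        driver.get(url)
        # Class names copied from the question; they may have changed since.
        click_when_ready(driver, class_attr_to_css("sc-gsnTZi fWJuXR"))
        time.sleep(5)  # same pause the question uses after clicking
        return driver.page_source
    finally:
        driver.quit()
```

With Chrome installed, `demo()` opens the page and clicks the button. Names such as `sc-gsnTZi` look like generated styled-components classes and may change when the site is redeployed, so a locator based on the button's visible text or another stable attribute may last longer.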