import cv2

import numpy as np

scale_percent = 30

image = cv2.imread(captcha)

dim = (image.shape[1] * scale_percent // 100, image.shape[0] * scale_percent // 100)

resized = cv2.resize(image, dim, interpolation = cv2.INTER_AREA)

gray = cv2.cvtColor(resized, cv2.COLOR_BGR2GRAY)  # convert to grayscale before thresholding

ret, threshold_image = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)

threshold_image = np.invert(threshold_image)

import cv2

import matplotlib.pyplot as plt

data = [

['255', '255', '255', '190', '190', '160', '76', '45', '78'],

['255', '255', '255', '190', '190', '160', '76', '45', '78'],

['255', '255', '255', '190', '190', '160', '76', '45', '78']

]

to_chunks = lambda x, n:[x[i*n:i*n+n] for i in range(len(x) // n)]

img = [to_chunks(list(map(int, row)), 3) for row in data]

f,ax = plt.subplots(1,1)

ax.imshow(img)

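from collections import Counter

# Count how often each contour area occurs (contours is assumed to come from cv2.findContours)

occurrence_count = Counter(map(cv2.contourArea, contours))

most_common_area = round(occurrence_count.most_common(1)[0][0])

import cv2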

IMAGE = '<path>'  # path to the input image

image = cv2.imread(IMAGE)

image = image[0:720, 100:1000]

grey = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

(thresh, grey) = cv2.threshold(grey, 70, 255, cv2.THRESH_BINARY)

res = cv2.resize(grey,(96, 64), interpolation = cv2.INTER_CUBIC)

for im in res:

    out = ''.join(['1' if x else '0' for x in im])

    print(out)

The bbox coordinates mentioned here correspond to the original images in CelebA. These are face crops generated by some other technique. You can either use the original images or skip the bbox altogether.
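If you do go back to the original images, the bbox rows can be turned into crop boxes along these lines. This is only a minimal sketch: it assumes the annotation file (`list_bbox_celeba.txt` in the usual download) has a count line, then a header line `image_id x_1 y_1 width height`, then one row per image. The helper names `parse_bbox_file` and `to_crop_box` and the sample rows are made up for illustration.

```python
def parse_bbox_file(text):
    """Parse bbox annotations in the assumed CelebA layout.

    Assumption: line 1 is the image count, line 2 is the header,
    and every following row is 'image_id x_1 y_1 width height'.
    """
    boxes = {}
    for line in text.strip().splitlines()[2:]:
        name, x, y, w, h = line.split()
        boxes[name] = (int(x), int(y), int(w), int(h))
    return boxes


def to_crop_box(bbox):
    """Convert (x, y, width, height) to (left, upper, right, lower)."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)


# Made-up sample rows, not real CelebA annotations.
sample = """2
image_id x_1 y_1 width height
a.jpg 10 20 100 120
b.jpg 5 5 50 60
"""

boxes = parse_bbox_file(sample)
print(to_crop_box(boxes["a.jpg"]))
```

The `(left, upper, right, lower)` tuple is the box format that Pillow's `Image.crop` expects, so it can be applied directly to the original (not the pre-cropped) image.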

import matplotlib.pyplot as plt

%matplotlib inline

import cv2

from PIL import Image, ImageDraw

import numpy

def get_colors(infile, numcolors=10, swatchsize=20, resize=150):

    plt.rcParams['figure.figsize'] = [15, 3]

    f,ax = plt.subplots(1,2)

    image = Image.open(infile)

    orig = image.copy()

    image = image.resize((resize, resize))

    result = image.convert('P', palette=Image.ADAPTIVE, colors=numcolors)

    result.putalpha(0)

    colors = result.getcolors(resize*resize)

    pal = Image.new('RGB', (swatchsize*numcolors, swatchsize))

    draw = ImageDraw.Draw(pal)

    posx = 0

    for count, col in sorted(colors, key=lambda x: x[0], reverse=True):

        draw.rectangle([posx, 0, posx+swatchsize, swatchsize], fill=col)

        posx = posx + swatchsize

    img = numpy.asarray(pal)

    del draw

    print('File: ', infile)

    for im in sorted(colors, key=lambda x: x[0], reverse=True):

        if im[1].index(max(im[1])) == 0:

            print(im, 'red')

        elif im[1].index(max(im[1])) == 1:

            print(im, 'green')

        elif im[1].index(max(im[1])) == 2:

            print(im, 'blue')

    ax[0].imshow(orig)

    ax[1].imshow(img)

if __name__ == '__main__':

    get_colors('D:\\00\\sample01.jpg', numcolors=1)

    get_colors('D:\\00\\sample02.jpg', numcolors=1)

    get_colors('D:\\00\\sample03.jpg', numcolors=1)

cmake_minimum_required(VERSION 2.8)

project( example )

find_package( OpenCV REQUIRED )

include_directories( ${OpenCV_INCLUDE_DIRS} )

add_executable( example example.cpp )

target_link_libraries( example ${OpenCV_LIBS} )

cmake .

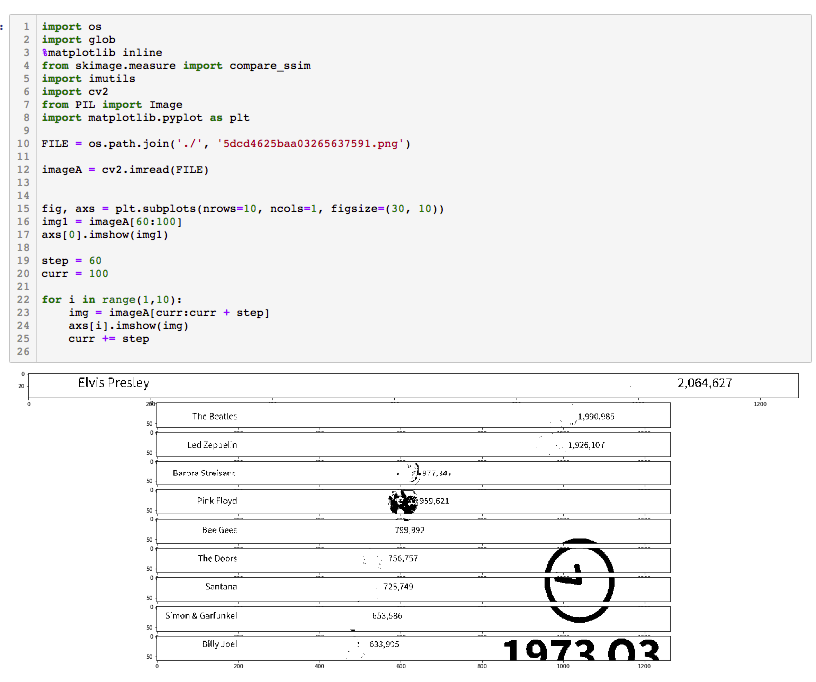

make

import os

import glob

%matplotlib inline

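from skimage.metrics import structural_similarity as compare_ssim  # compare_ssim moved out of skimage.measure in newer scikit-image releases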

import imutils

import cv2

from PIL import Image

import matplotlib.pyplot as plt

FILE = os.path.join('./', '5dcd4625baa03265637591.png')

imageA = cv2.imread(FILE)

fig, axs = plt.subplots(nrows=10, ncols=1, figsize=(30, 10))

img1 = imageA[60:100]

axs[0].imshow(img1)

step = 60

curr = 100

for i in range(1,10):

    img = imageA[curr:curr + step]

    axs[i].imshow(img)

    curr += step

import cv2

import time

import numpy as np

import pyscreenshot as ImageGrab

import pyautogui

def find_patt(image, patt, thres):

    img_grey = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    (patt_H, patt_W) = patt.shape[:2]

    res = cv2.matchTemplate(img_grey, patt, cv2.TM_CCOEFF_NORMED)

    loc = np.where(res>thres)

    # Return the (x, y) matches as a list so len() and indexing work in Python 3

    return patt_H, patt_W, list(zip(*loc[::-1]))

if __name__ == '__main__':

    screenshot = ImageGrab.grab()

    img = np.array(screenshot.getdata(), dtype='uint8').reshape((screenshot.size[1],screenshot.size[0],3))

    patt = cv2.imread('butt01.png', 0)

    h,w,points = find_patt(img, patt, 0.60)

    if len(points)!=0:

        pyautogui.moveTo(points[0][0]+w/2, points[0][1]+h/2)

        pyautogui.click()