# my global config
global:
  scrape_interval: 5s # Set the scrape interval to every 5 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - 'oncall_alertmanager_1:9093'

rule_files:
  - $PWD/alert.rules.yml

groups:

  - name: alert.rules
    rules:
      - alert: InstanceDown
        expr: up == 0
        for: 1m
        labels:
          severity: "critical"
        annotations:
          summary: "Endpoint {{ $labels.instance }} down"
          description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."
      - alert: HostOutOfMemory
        expr: node_memory_MemAvailable / node_memory_MemTotal * 100 < 25
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Host out of memory (instance {{ $labels.instance }})"
          description: "Node memory is filling up (< 25% left)\n VALUE = {{ $value }}\n LABELS: {{ $labels }}"
      - alert: HostOutOfDiskSpace
        expr: (node_filesystem_avail{mountpoint="/"} * 100) / node_filesystem_size{mountpoint="/"} < 50
        for: 1s
        labels:
          severity: warning
        annotations:
          summary: "Host out of disk space (instance {{ $labels.instance }})"
          description: "Disk is almost full (< 50% left)\n VALUE = {{ $value }}\n LABELS: {{ $labels }}"
      - alert: HostHighCpuLoad
        expr: (sum by (instance) (irate(node_cpu{job="node_exporter_metrics",mode="idle"}[5m]))) > 80
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Host high CPU load (instance {{ $labels.instance }})"
          description: "CPU load is > 80%\n VALUE = {{ $value }}\n LABELS: {{ $labels }}"

version: '3'

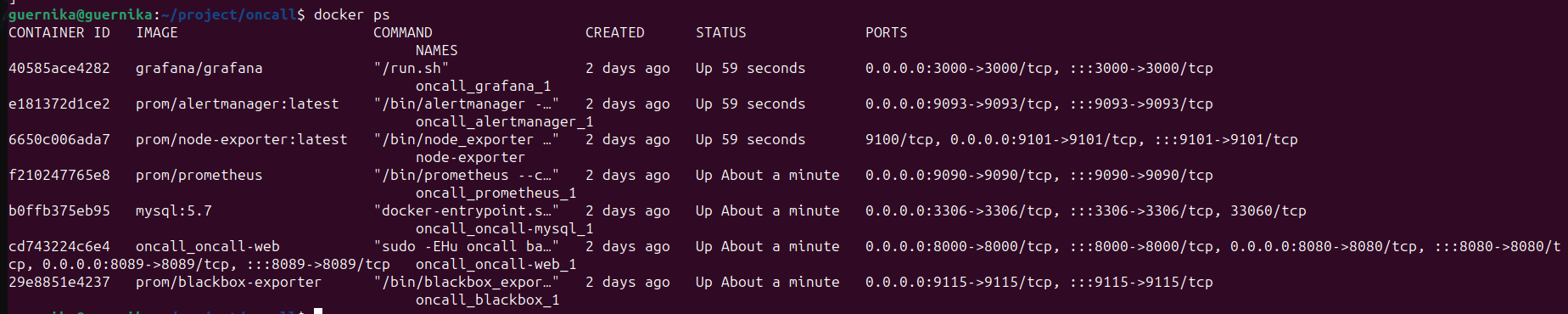

services:
  prometheus:
    image: prom/prometheus
    volumes:
      - $PWD/prometheus.yml:/etc/prometheus/prometheus.yml
    ports:
      - "9090:9090"
    depends_on:
      - cadvisor
      - blackbox
    networks:
      - iris
  alertmanager:
    image: prom/alertmanager:latest
    volumes:
      - ./alertmanager.yml:/etc/alertmanager/alertmanager.yml
    ports:
      - 9093:9093
    depends_on:
      - prometheus
    networks:
      - iris

rule_files:
  - "/etc/prometheus/aler-rules/test.rules"
  - "test.rules"

alerting:
  alertmanagers:
    - static_configs:
        - targets:
            - 'oncall_alertmanager_1:9093'

  prometheus:
    image: prom/prometheus
    volumes:
      - $PWD/prometheus.yml:/etc/prometheus/prometheus.yml
      - $PWD/aler-rules/test.rules:/etc/prometheus/alert-rules/test.rules
    ports:
      - "9090:9090"
    depends_on:
      - cadvisor
      - blackbox
      - alertmanager
    networks:
      - iris
  alertmanager:
    image: prom/alertmanager:latest
    volumes:
      - ./alertmanager.yml:/etc/alertmanager/alertmanager.yml
    ports:
      - 9093:9093
    networks:
      - iris

groups:
  - name: test_rules
    rules:
      - record: scrape_samples
      - alert: PrometheusJobMissing
        expr: absent(up{job="prometheus"})
        for: 0m
        labels:
          severity: warning
        annotations:
          summary: Prometheus job missing (instance {{ $labels.instance }})
          description: "A Prometheus job has disappeared\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"
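A recording rule is only valid when `record` is paired with an `expr`, which the `scrape_samples` entry above does not show. The following is a minimal sketch of what a complete rules file of this shape could look like; the expression given to `scrape_samples` is a hypothetical placeholder chosen for illustration, not the rule that was originally intended.

```yaml
groups:
  - name: test_rules
    rules:
      # Hypothetical expression: sums the built-in scrape_samples_scraped
      # metric per job. Replace it with whatever the rule should record.
      - record: scrape_samples
        expr: sum by (job) (scrape_samples_scraped)
      - alert: PrometheusJobMissing
        expr: absent(up{job="prometheus"})
        for: 0m
        labels:
          severity: warning
```

A file like this can be checked before Prometheus is restarted with `promtool check rules <file>`.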

rule_files:
  - $PWD/alert.rules.yml

volumes:
  - $PWD/prometheus.yml:/etc/prometheus/prometheus.yml

$PWD can be used here, but it has to be defined beforehand, for example in a .env file or in one of the seven places where such variables can be defined.

# Load and evaluate rules in this file every 'evaluation_interval' seconds.
rule_files:
  - '/etc/prometheus/alert-rules/*'
  # - 'first.rules'
  # - 'second.rules'

volumes:
  - ./prometheus:/etc/prometheus
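Put together, the two fragments above might be combined as in the sketch below. It assumes a host directory `./prometheus` next to `docker-compose.yml` that contains `prometheus.yml` and an `alert-rules/` subdirectory; that layout is an assumption made for illustration. The two files are shown as separate YAML documents in one block.

```yaml
# docker-compose.yml (fragment). Mounting the whole directory with a
# relative path means $PWD does not have to be defined at all.
services:
  prometheus:
    image: prom/prometheus
    volumes:
      - ./prometheus:/etc/prometheus
---
# ./prometheus/prometheus.yml (fragment). The path is the one seen inside
# the container, written literally rather than through a shell variable.
rule_files:
  - '/etc/prometheus/alert-rules/*'
```

With this layout, new rule files dropped into `./prometheus/alert-rules/` on the host are matched by the glob without any change to the compose file. Running `promtool check config /etc/prometheus/prometheus.yml` inside the container is one way to confirm that the rule files are found and parse.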